All the myriad monies in translation!

Approaching the mess of currencies, QA and data for testing

Those who have attended my various workshops and webinars on terminology, translation and localization quality assurance, or regular expressions (regex) know that I like to give examples involving money. And I’ll continue that habit in my upcoming free public lectures on terminology next week (February 20) and the week following (February 27).

Money is presented in texts that we translate in quite a number of different ways. These are structured expressions, within the scope of “terminology” in fact as I define it, but the structures can vary widely, often more than we anticipate.

For all the times I’ve talked about these matters, I’ve never tried to do so comprehensively, because text authors can be all over the place in the ways they present financial figures, prices or other expressions of monetary amount. Within a limited domain, such as corporate annual reports or travel guides from a particular publisher, the various possibilities tend to be limited by the personal preferences of a limited set of authors guided by typical professional presentation habits or style guides, but even then there can be surprises.

In this discussion, I am going to present examples from three sets of German to English translation data, because having engaged in commercial translation from German to English in a number of fields for over twenty years, I have a lot of that kind of information in my archives, and I continue to be drawn in to help with colleagues and service companies active with those (and other) languages. If you or others in your circles work with other languages, my approach can be adapted easily enough to help you achieve more accuracy and efficiency when dealing with monetary figures.

The three data sets I’ll explore in this discussion are:

a somewhat recent corpus of about 2.5 million words of EU legislation,

an archive of several German travel guides for Portugal published by Michael Müller Verlag and used with permission of the owner, and

translation memory excerpts from more than two decades of work by me and a former partner, all information in the public record for a very long time.

Understanding how structured information like monetary expressions (or dates, time information, citations, etc.) occurs in the source language texts you or your associates are tasked with translating can be very helpful to develop strategies for verifying that information’s correct conversion and formatting into desired target language expressions.

The present discussion will relate mostly to how to explore your source text information and identify its recurring patterns. Engaging in such exercises with a suitably large corpus helps to avoid surprises and omitted quality checks, and the information collected during these corpus studies can also reveal the need for better style guidelines for authors, for example.

I use information like this to create filtering and search expressions for structured text, so that I can verify manually that things are in proper order. I also use it to build automated quality checks, which free my mind for reading and evaluating the flow of a translated text and its persuasive qualities, rather than tiring myself by staring at many thousands of words trying to find a decimal comma used where there should have been a decimal point and other such tedious nonsense.

These capabilities are common to most modern translation environment tools, and are among their greatest advantages. My tool of choice, memoQ, also features auto-translation rules, which can make a proper target language expression appear “magically” in the Translation results list alongside glossary hits, translation memory and LiveDocs corpus matches, etc. so that these can be inserted accurately with the desired formatting (non-breaking spaces, currency symbol positioning, preferred negative sign characters, etc.) into the translation, and so that deviations from the specified style can be noticed immediately with great accuracy or in later QA checks.

To explore the three corpora for the present discussion, we’re going to use some simple regular expressions. And maybe some that are not so simple. But the good news is that these can be copied and pasted from a curated reference list (memoQ includes a special library tool, the Regex Assistant, for managing such information), so you don’t have to endure the horrors of learning regular expressions despite the frequent assertions by sadistic tech gurus that you ought to.

To get started, we should build some lists. Lists of symbols or codes for the currencies that we want to deal with. I usually deal with US dollars or euros, but I’ve often had to deal with Swiss, Russian, Australian or other currencies. You surely have your own list of frequent currencies. So just for a start, I’ll write a quick one to use in my exploration, one with all the possible variations I expect in different texts from different authors. I’ll surely miss some.

EUR, €, USD, $, US$, US Dollar, GBP, britische Pfund, £, RUB, ₽, CHF, Fr., SFr., ₣

These are markers, or parts thereof, that I might find used in a German text talking about amounts in euros, US dollars, UK pounds, Russian rubles and Swiss francs. Make your own list based on currencies you expect.

Experience has taught me, however, that the elements in that list might be written in different ways. With different capitalization perhaps. Or with periods omitted for those Swiss franc abbreviations. There are a lot of ways of dealing with those possibilities, and since this isn’t a regex lesson, I won’t expound on my choices, just rewrite the list based on my experience, rules of regex syntax (such as escaping certain special characters like a dollar sign or a period by using a backslash character) and bad typing I’ve come to expect:

(?i)(EUR|€|US\s?[D\$]|\$|GBP|(br[\.a-z]+)?\s?Pfund|£|RUB|₽|CHF|S?Fr|₣)

The (?i) is a switch that makes my search insensitive to case. The list is in parentheses, which form a group, and that can be useful sometimes. The vertical pipe characters (“|”) mean logical OR.
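For readers who want to poke at that list outside of memoQ, here is a minimal sketch in Python. It assumes that Python's built-in re module treats this particular expression the same way the .NET dialect does (it contains nothing exotic), and the sample strings and the helper name first_marker are made up for illustration.

```python
import re

# The currency-marker list from the text, unchanged.
# (?i) makes the match case-insensitive; the pipes separate the alternatives.
CURRENCY_MARKERS = re.compile(
    r"(?i)(EUR|€|US\s?[D\$]|\$|GBP|(br[\.a-z]+)?\s?Pfund|£|RUB|₽|CHF|S?Fr|₣)"
)

# Made-up sample strings, not taken from any of the corpora discussed here.
samples = ["55€", "1,25 eur", "US Dollar", "brit. Pfund", "sfr 20", "kein Betrag"]


def first_marker(text):
    """Return the first currency marker found in text, or None."""
    match = CURRENCY_MARKERS.search(text)
    return match.group(0) if match else None


for sample in samples:
    print(f"{sample!r:15} -> {first_marker(sample)!r}")
```

Note that "US Dollar" is only matched as far as "US D". That is all a marker needs to do for filtering purposes.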

Germans typically write the currency markers after the amount: 55€ or 1,25 EUR, for example. Spaces can be unpredictable. And there’s also that occasional bad habit of writing dashes after the decimal separator: 100,— Euro for instance, and I won’t consider all the different Unicode variants of those damned dashes for now and whether one long dash or a couple of short ones will be used.

But sometimes in German texts, an author will put the currency marker in front of the numbers as is usually the case in English. (And some people writing in English will put the marker after the numbers, especially if that’s typical in their native language.)

Are you exhausted by all this yet? Here’s a little shock therapy that might give you the energy to run away and pour yourself a stiff drink:

(?i)((EUR|€|US\s?[D\$]|\$|GBP|(br[\.a-z]+)?\s?Pfund|£|RUB|₽|CHF|S?Fr|₣)\s?(\d+)|(\d+)\s?(EUR|€|US\s?[D\$]|\$|GBP|(br[\.a-z]+)?\s?Pfund|£|RUB|₽|CHF|S?Fr|₣))

Don’t panic. And don’t worry about understanding that crazy syntax. I take something like this and write it to my Regex Assistant library in memoQ like this:

Then I never think about all those details again. I just look up the expression using the plain language name, labels or search words in my description. You can do the same in a simple text file, Excel spreadsheet or whatever.

I like using test pages like the one on the Regex Storm web site for troubleshooting, especially because of the alternating highlight colors for matches in my test data or the table of regex group values offered for matches, but the latter isn’t necessary for a generalized filtering expression like we have here.

Notice that my very general regex doesn’t capture the entire currency expression in many cases, but it’s good enough, I think, to filter for segments that will show me what’s in a corpus (one or more documents I’ll be translating or checking, or maybe a translation memory, for example).
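To make that point concrete, here is a rough sketch in Python of using the general expression as a segment filter. The segments are invented for illustration, the names MARKERS, MONEY_FILTER and matched_text are my own, and I am assuming that Python's re module behaves like the .NET dialect for this expression.

```python
import re

# The marker list from above, reused twice: once before the digits, once after.
MARKERS = r"(EUR|€|US\s?[D\$]|\$|GBP|(br[\.a-z]+)?\s?Pfund|£|RUB|₽|CHF|S?Fr|₣)"
MONEY_FILTER = re.compile(rf"(?i)({MARKERS}\s?(\d+)|(\d+)\s?{MARKERS})")

# Made-up German segments for illustration only.
segments = [
    "Der Eintritt kostet 55€ pro Person.",
    "Preis: 1,25 EUR",
    "Die Gebühr beträgt $ 100,50.",
    "Geöffnet von 9 bis 17 Uhr.",
]


def matched_text(segment):
    """Return the part of the segment the filter matched, or None."""
    match = MONEY_FILTER.search(segment)
    return match.group(0) if match else None


for segment in segments:
    hit = matched_text(segment)
    if hit is not None:
        print(f"{hit!r:10} in: {segment}")
```

The second and third segments are found through "25 EUR" and "$ 100" only, so the decimal parts are not captured, but the segments still show up in the filtered list, which is the whole point here.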

So let’s take this puppy to the park and play!

First I’ll use this on my little 2.5 million word EU legislation corpus and see what I find.

I’ve got a false positive match in Segment 1583, which I could correct for but will just ignore, and otherwise the results look good. I can see that the source text is using non-breaking spaces between amounts and currency markers, and I also see that sometimes the translators forgot those.

If I wanted to make some QA rules for texts like this, I would scroll through the results, copy out relevant examples and use those as the basis for more refined expression development work for particular purposes.
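As one example of where such refinement might lead, here is a small Python sketch of a check for the forgotten non-breaking spaces. It assumes that the desired style calls for a non-breaking space (U+00A0) between amount and marker, it uses a deliberately shortened marker list, and the name missing_nbsp is hypothetical. A real QA rule in memoQ would look different.

```python
import re

# Shortened, hypothetical marker list; extend it for real work.
MARKERS = r"(?:EUR|€|USD|\$|GBP|£)"

# An ordinary space (U+0020) between a digit and a marker, in either order.
PLAIN_SPACE = re.compile(rf"(?i)(?:{MARKERS} \d|\d {MARKERS})")


def missing_nbsp(segment):
    """True if an amount and its marker are joined by an ordinary space."""
    return PLAIN_SPACE.search(segment) is not None


# Made-up target segments; \u00a0 is the non-breaking space.
print(missing_nbsp("a fee of EUR 100"))        # True
print(missing_nbsp("a fee of EUR\u00a0100"))   # False
print(missing_nbsp("a fee of 100\u00a0EUR"))   # False
```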

If I just want to look through a big report and visually verify that the figures in the translation look OK, I could do that too. The expression is good enough for that purpose.

My results showed a bit over 400 sentences altogether, and except for an occasional omission of the expected non-breaking space (that looks like a degree symbol when the display of non-printing characters is turned on), the source is rather uniform in the way monetary amounts are written, so the job of building QA and translation assistance tools is comparatively easy for a semi-expert.

Let’s have a look at those German travel guides now….

I look up the expression I want in my personal memoQ Regex Assistant library:

I see a few inconsistencies in the source text:

And then a few more and some false positives with abbreviated days of the week:

I had nothing to do with translations like “lunch table” by the way. Stuff like that is worth noting and recording as forbidden terminology in a term base.

But the results are still good enough for visual checks or collecting sample data for creating and testing more accurate regexes. And since I know that Swiss currency won’t show up in a German text about tourism in Portugal, I’ll just delete all the irrelevant currencies from my search expression and avoid those false positives.
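Here is a quick Python sketch of what that trimming does. The helper money_filter, the two sample strings and the choice to keep only EUR and € are my assumptions for illustration; the structure of the expression is the same as in the general one.

```python
import re


def money_filter(markers):
    """Build the marker-before-or-after-digits filter for a marker list."""
    return re.compile(rf"(?i)(({markers})\s?(\d+)|(\d+)\s?({markers}))")


FULL = money_filter(
    r"EUR|€|US\s?[D\$]|\$|GBP|(br[\.a-z]+)?\s?Pfund|£|RUB|₽|CHF|S?Fr|₣"
)
EURO_ONLY = money_filter(r"EUR|€")

# Made-up examples: opening hours with an abbreviated weekday, and a price.
opening_hours = "Mo-Fr 9-18 Uhr"
price = "Mittagstisch ab 8 €"

print(FULL.search(opening_hours).group(0))   # Fr 9  (false positive)
print(EURO_ONLY.search(opening_hours))       # None
print(EURO_ONLY.search(price).group(0))      # 8 €
```

The abbreviated Friday looks like a Swiss franc marker to the full expression, and once the franc alternatives are gone, so is the false positive.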

And now a look at my “Big Mama”

That’s what some people call a huge translation memory database in which all or much of one’s translation work is collected. Mine has a 24-year history. And a lot of data. Hits for most of the cases covered by that big, scary general regex.

I think I’ll spare you all those details.

It’s enough to say that if I wanted to examine that TM to collect sample data for some QA rules related to the currencies in that expression or others I might be interested in, I’d have a lot of helpful cases and probably some good insight into false positives I might want to account for in my development work.

Translation memories from particular clients or working groups are invaluable sources of information for creating appropriate rules for quality checking or collecting enough data that discussions of possible style guides or advice for writing teams can be truly useful.

If a translation agency asks for help with this sort of thing, I never take their word for what the source data really look like. They might think they know, but when you start digging into the real data a different picture often emerges. And then you can make something that helps with the actual state of matters.

I’ve done this many times, in many languages for individuals, teams and companies. And many times I hear how a simple thing like that regex used in the search examples above or a little auto-translation ruleset saves hours or days of awful, boring work on a big project.

Any translator, reviewer, quality checker or language service project manager can make good use of tools like these without knowing any regular expression syntax. The important thing here is organizing the solutions in an accessible way and recognizing situations where they can be used.

I have a lot of published information and resources for challenges like these, and I can help or recommend appropriate experts in most cases presented to me. All questions are welcome.

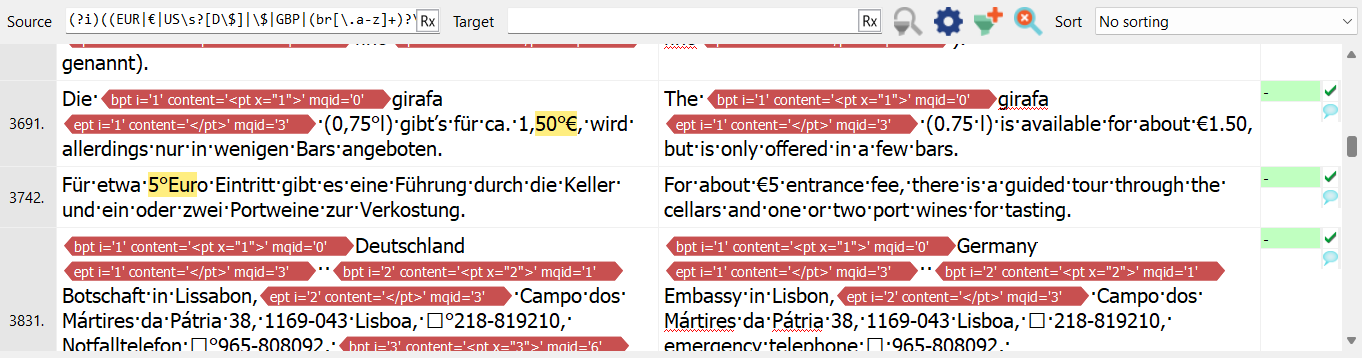

It's probably worth noting that for screening/filtering purposes, all the currency symbols ($, £, €, ₽ and so on) could in fact be replaced by the character class designation "\p{Sc}". To learn more about Unicode character classes in .NET regex (the dialect on which the implementation in memoQ is based), see https://learn.microsoft.com/en-us/dotnet/standard/base-types/character-classes-in-regular-expressions
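Python's built-in re module does not support \p{Sc}, so for anyone experimenting outside of memoQ, here is a rough equivalent using the standard unicodedata module, which exposes the same Unicode "Sc" (currency symbol) category. The function name and the sample string are made up for illustration.

```python
import unicodedata


def currency_symbols(text):
    """Return every character in text whose Unicode category is Sc."""
    return [ch for ch in text if unicodedata.category(ch) == "Sc"]


print(currency_symbols("55€, $ 100, £3, 500 ₽, 20 ₣, 10 EUR"))
# ['€', '$', '£', '₽', '₣']
```

Letter codes like EUR or CHF are not currency symbols in the Unicode sense, so they still have to be listed explicitly.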